THine Value Establishing a framework that multilaterally supports embedding camera functions into systems to achieve great progress toward realization of “SWARM/IoT of cameras”

2019.02.12

Camera functions are rapidly spreading beyond smartphones and digital cameras. Aside from automobiles, a growing number of products such as XR headsets, industrial equipment, medical equipment, educational equipment, and drones now have built-in cameras. However, as we pointed out in the first and second articles of this series, embedding camera functions into an application is not easy because it requires knowledge of both hardware and firmware. The “Camera Development Kit (CDK)” offered by THine Electronics dramatically reduces the effort of developing firmware, but the kit alone may not be enough for designers who are embedding camera functions for the first time. THine Electronics has therefore begun a new initiative: establishing a framework that multilaterally supports embedding camera functions into systems, alongside developing an Image Signal Processor (ISP) and CDK optimized for new applications.

Progress in establishing sensor networks

You may have heard the term “SWARM.” It refers to a network of numerous sensors deployed throughout the world, each exchanging data wirelessly. Professor Jan Rabaey of the University of California, Berkeley, named the network SWARM because the huge number of sensors resembles a swarm of insects or birds.

SWARM is basically synonymous with “sensor network.” The term “sensor network,” however, has since been replaced by “Internet of Things (IoT),” which is now used worldwide.

Great progress has been made in establishing sensor networks all over the world, and SWARM, that is, IoT, is gradually being realized. Following closely behind these various sensors, cameras have also begun to be installed in various places. Camera functions in surveillance cameras, smartphones, automobiles, XR headsets, industrial equipment, medical equipment, educational equipment, and drones are no longer anything special. Consequently, a “SWARM of cameras” and an “IoT of cameras” should eventually become a reality as well.

Cameras will offer wider appeal

Applications with camera functions are rapidly increasing. In the past, mobile devices and automobiles were the “two main users” of camera functions. Recently, however, the situation has clearly changed, with remarkable progress in installing camera functions in devices such as XR headsets, industrial equipment, medical equipment, educational equipment, and drones.

In industrial applications, for example, camera functions are increasingly being built into virtual reality (VR), augmented reality (AR), and mixed reality (MR) headsets used for maintenance work. When AR is used for aircraft maintenance, a camera captures the work situation and the captured images are shown on a head-mounted display, with a manual shown on part of the display if necessary (Fig. 1). With MR, three-dimensional (3D) objects can be displayed in the real space captured by the cameras and manipulated with hand movements or similar means. This makes it possible to conduct virtual training for maintenance tasks such as turning valves without using real objects.

Fig. 1. Image of augmented reality (AR)

Cameras are becoming components

Various applications now have camera functions installed. Such a trend means that cameras are now becoming components. As this trend becomes more advanced, more diverse manufacturers will install camera functions on their devices.

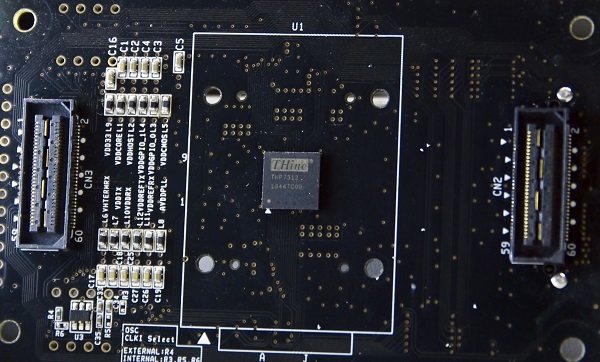

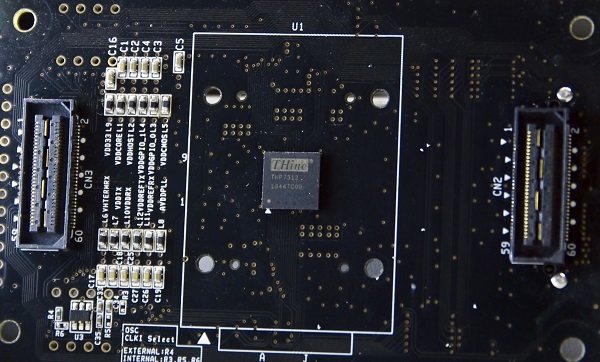

Incorporating camera functions, however, is not so easy. As we pointed out in the first and second articles of this series, it requires a relatively high level of knowledge of both hardware and firmware in order to make skillful use of an Image Signal Processor (ISP) (Fig. 2). Companies that are strong in hardware tend to have difficulty developing embedded firmware, while companies well versed in embedded development seem to struggle with hardware development.

Fig. 2. Image Signal Processor (ISP) “THP 7312”

Moreover, as cameras become more like components and are adopted by a wider range of manufacturers, those manufacturers will include companies whose past business had nothing to do with electronics. For example, helmet manufacturers may develop products equipped with VR/AR/MR functions, and manufacturers of vehicle door mirrors may replace their mirrors with cameras.

Such manufacturers cannot be expected to have accumulated much knowledge of hardware and firmware, so they will likely have difficulty incorporating camera functions. In the worst case, their development may fail, an outcome that should definitely be avoided.

Building a value chain

THine Electronics has therefore focused its efforts on establishing a framework to support the development of embedded camera functions. Basically, THine Electronics plans to provide multifaceted support according to the situation of manufacturers that need support. The support system to be established roughly consists of three parts.

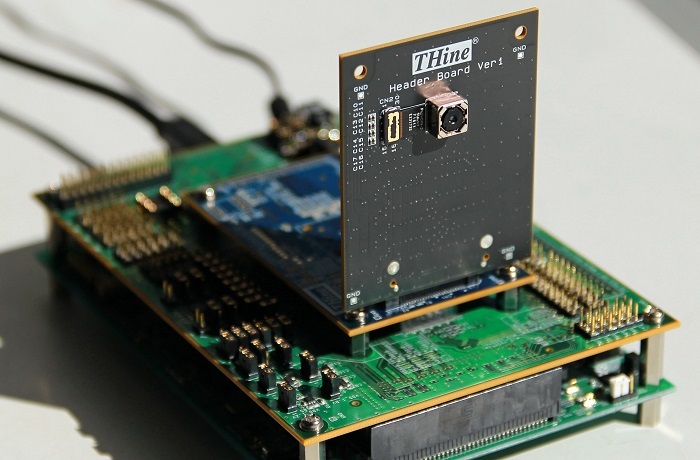

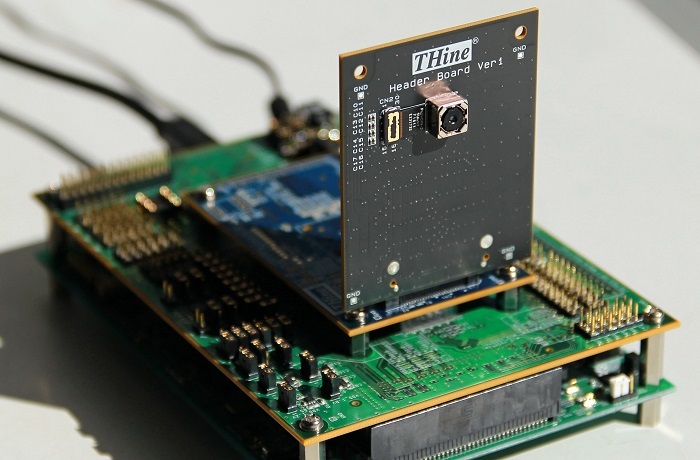

The first part is the Camera Development Kit (CDK) introduced in the first and second articles of this series. The CDK consists of a Software Development Kit (SDK), an Evaluation Board (EVB), and a GUI-based tool known as the THine Tuning Tool (3T) (Fig. 3).

Fig. 3. Evaluation Board (EVB) included in the Camera Development Kit (CDK)

With this kit, firmware can be generated automatically simply by setting parameters in the GUI-based tuning tool (Fig. 4). In other words, the kit enables firmware development with no programming or coding, which greatly lowers the barrier to firmware development.

Firmware can be written simply by entering the required parameters into the GUI-based tool “3T” displayed on a PC.

Fig. 4. Using the THine Tuning Tool (3T)

However, extensive knowledge of cameras and image processing is still necessary to use the CDK skillfully. Even with the CDK, programming work may be required. Specifically, each image sensor has different register settings, so corresponding driver software must be developed for the sensor being used. If that driver software cannot be obtained from external sources, there is no choice but to develop it.
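To illustrate why each image sensor needs its own driver, here is a minimal sketch in Python. It is not THine's SDK and does not reflect any real sensor's register map. It assumes that a driver is essentially an ordered table of register writes sent over a control bus; the sensor names, bus addresses, register addresses, values, and the `FakeBus` stand-in are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# One register write is an (address, value) pair.
RegisterTable = List[Tuple[int, int]]


@dataclass(frozen=True)
class SensorDriver:
    """Hypothetical per-sensor driver: a bus address plus an init sequence."""
    name: str
    bus_address: int
    init_sequence: RegisterTable


# Two made-up sensors. The point is only that the tables differ per sensor,
# so a driver written for one cannot be reused for the other as-is.
SENSOR_A = SensorDriver("sensor_a", 0x1A, [(0x0100, 0x00), (0x0202, 0x03), (0x0100, 0x01)])
SENSOR_B = SensorDriver("sensor_b", 0x36, [(0x3008, 0x82), (0x3503, 0x07)])


def initialize(driver: SensorDriver,
               write_register: Callable[[int, int, int], None]) -> int:
    """Send every register write in order and return how many were sent."""
    for address, value in driver.init_sequence:
        write_register(driver.bus_address, address, value)
    return len(driver.init_sequence)


class FakeBus:
    """Stand-in for a real control bus; it only records the writes."""

    def __init__(self) -> None:
        self.log: List[Tuple[int, int, int]] = []

    def write(self, device: int, address: int, value: int) -> None:
        self.log.append((device, address, value))


if __name__ == "__main__":
    bus = FakeBus()
    for sensor in (SENSOR_A, SENSOR_B):
        count = initialize(sensor, bus.write)
        print(f"{sensor.name}: {count} register writes")
```

Keeping the per-sensor data separate from the generic `initialize` routine means that supporting a new sensor is mostly a matter of supplying a new table, which is the kind of work that remains even when the rest of the firmware is generated by a tool.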

The second part, intended for users who are not confident in their knowledge of cameras and image processing or who lack sufficient programming experience, is support through web conferences. THine Electronics has already built databases specifically for hardware and software development. Using these databases, it can anticipate at the initial development stage the pitfalls that users may encounter and provide appropriate support information accordingly. In most cases, issues can be solved with one short web conference.

In some cases, however, users may have issues that cannot be solved by a web conference. This is where the third part comes in: introducing users to system integrators that specialize in incorporating camera functions.

Because THine Electronics provides ISPs and CDKs to a large number of users, it has points of contact with a wide variety of users, including system integrators of camera functions. Moreover, THine Electronics licenses some of its image-sensor driver software as libraries, and some users have also licensed out driver software that they developed in the past. THine Electronics intends to serve as a bridge between system integrators and driver software developers on one side and users having difficulty incorporating camera functions on the other.

In fact, Katsumi Kuwayama, product manager of the camera engineering team at THine Electronics, asked users and supporters at a social gathering for the company's seminar: “Don't you think it would be fun if we could build a camera development community from Japan with CDK?” For that purpose, THine Electronics will continue to work hard toward “building a value chain” regarding the incorporation of camera functions.

Summarizing and utilizing users' voices for development of next-generation products

As described above, THine Electronics has established relationships with many users who need camera functions through the provision of ISPs and CDKs. Through these relationships, THine Electronics has quick access to the “voices” of various types of users.

Of course, the requirements for camera functions differ depending on the application. For single-lens reflex cameras, taking beautiful photographs is most important. In medical applications, however, priority is given to detecting disease reliably rather than to taking beautiful photographs, so the differences between normal tissue and lesions must be recorded clearly. XR terminals and industrial applications each have their own top priorities as well. Moreover, these priorities change and evolve day by day.

In other words, camera functions were originally developed along two axes: mobile equipment and automobiles. With the addition of medical applications, industrial applications, XR terminals, and other applications, there are now n axes. It is therefore necessary to optimize the ISP and CDK according to these changes.

THine Electronics has already begun responding to the voices of various users in developing next-generation ISPs and CDKs. For the CDK, it is currently developing new functions for medical, digital health, and XR headset applications. Trials of some of these functions with priority users have already begun.

Next-generation products are also being considered for the ISP. In addition to supporting higher frame rates at high resolutions, covering 8K (7,680 × 4,320 pixels) as well as the current 4K (3,840 × 2,160 pixels), new functions for new applications are being considered. Examples include a function that extends the dynamic range and enhanced functionality and performance for “3A,” which consists of Auto Focus (AF), Auto Exposure (AE), and Auto White Balance (AWB).
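As a rough illustration of why 8K support is demanding, the short calculation below compares the pixel counts of the two resolutions quoted above. The 30 fps frame rate is an assumption chosen only for the example; the article does not state target frame rates.

```python
# Resolutions as quoted in the article (width, height in pixels).
UHD_4K = (3840, 2160)
UHD_8K = (7680, 4320)

# Assumed frame rate, for illustration only.
ASSUMED_FPS = 30


def pixels_per_frame(resolution: tuple) -> int:
    """Number of pixels in a single frame."""
    width, height = resolution
    return width * height


def pixels_per_second(resolution: tuple, fps: int) -> int:
    """Pixels the ISP must process each second at the given frame rate."""
    return pixels_per_frame(resolution) * fps


if __name__ == "__main__":
    for label, res in (("4K", UHD_4K), ("8K", UHD_8K)):
        print(label, pixels_per_frame(res), pixels_per_second(res, ASSUMED_FPS))
    # 8K has four times the pixels of 4K at any given frame rate.
    print(pixels_per_frame(UHD_8K) // pixels_per_frame(UHD_4K))
```

At the same frame rate, an 8K stream carries four times as many pixels as a 4K stream (33,177,600 versus 8,294,400 per frame), so raising both resolution and frame rate multiplies the load on the ISP.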

Realizing some of these new functions in dedicated hardware also makes it possible to significantly ease later-stage software processing, such as CNN processing on edge computers and GPU processing on cloud computers, and to improve performance while keeping the ISP chip size and power consumption down. The division of roles between such hardware and software is currently under consideration with partner companies.

Related Contents

- A new development environment for camera systems: CDK will solve problems caused by the fusion of cameras and AI

- Details of GUI-based tuning tool of Camera Development Kit (CDK) for greatly enhanced ISP performance with no firmware coding

- Developed an automatic generation tool for ISP firmware that is no longer dependent on FPGA